Scientific name is Pycnoporus coccineus. Common on fallen logs and branches.

Monthly Archives: July 2016

How the World’s Most Powerful Supercomputer Inched Toward the Exascale

The powerful Sunway TaihuLight supercomputer makes some telling trade-offs in pursuit of efficiency

An Internet of Drones

by Robert J. Hall

AT&T Labs Research

The safe operation of drones for commercial and public use presents communication and computational challenges. This article overviews these challenges and describes a prototype system (the Geocast Air Operations Framework, or GAOF) that addresses them using novel network and software architectures.

The full article can be accessed by subscribers at https://www.computer.org/cms/Computer.org/ComputingNow/issues/2016/07/mic2016030068.pdf

Drones, flying devices lacking a human pilot on-board, have attracted major public attention. Retailers would love to be able to deliver goods using drones to save the costs of trucks and drivers; people want to video themselves doing all sorts of athletic and adventuresome activities; and news agencies would like to send drones to capture video of traffic and other news situations, saving the costs of helicopters and pilots.

Today, both technological and legal factors restrict what can be achieved and what can be allowed safely. For example, the US Federal Aviation Administration (FAA) requires drones to operate within line-of-sight (LOS) of a pilot who’s in control, and also requires drones to be registered.

In this article, I will briefly overview some of the opportunities available to improve public and commercial drone operation. I will also discuss a solution approach embodied in a research prototype, the Geocast Air Operations Framework (GAOF), that I am working on at AT&T Labs Research. This prototype system has been implemented and tested using simulated drones; aerial field testing with real drones is being planned and will be conducted in accordance with FAA guidelines. The underlying communications platform, the AT&T Labs Geocast System,1-3 has been extensively field tested in other (non-drone) domains with Earth-bound assets, such as people and cars. The goal of the work is to demonstrate a path toward an improved system for the operation of drones, with the necessary secure command and control among all legitimate stakeholders, including the drone operator, the FAA, law enforcement, and private property owners and citizens. While today there are drones and drone capabilities that work well with one drone operating in an area using a good communication link, the challenges will increase when there are tens or hundreds of drones in an area.

Note that some classes of drone use are beyond the scope of this discussion:

• Military drones. The US military has been operating drones for many years and is the acknowledged world expert in the field. However, its usage scenarios are quite different, and many of its technical approaches are out of scope for this discussion, because it has resources and authority that are unavailable (such as military frequency bands) or impractical (high-cost drone designs and components) to use in the public/commercial setting. Instead, we seek solutions whose costs are within reason for public and commercial users and which do not require access to resources unavailable to the public.

• Non-compliant drones. It will always be possible for someone to build and fly drones that do not obey the protocols of our system. We will not discuss defenses against such drones, for example electromagnetic pulse (EMP) weapons, jamming, or trained birds-of-prey.4 However, we hope to work toward a framework for safe and secure large-scale drone use, analogous to establishing traffic laws for cars.

• Drone application-layer issues. Obviously, drones should actually do something useful once we have gone to the trouble to operate them safely. Often, this takes the form of capturing video or gathering other sensor data. This article does not address the issues involved in transferring large data sets from drone to ground or drone to cloud.

The rest of this article will give background on the communications system underlying the GAOF, the challenges of safe and scalable air operations, and how the GAOF addresses these challenges.

La Reverie 3

On Sunday 23rd July 2016, SDAR Symphonic Band performed at Kolej Tuanku Jaafar (KTJ) Mantin. The La Reverie 3 concert is the third in the series performed by the boys. KTJ has a magnificent hall with a very good sound system. The setting is comfortable and the boys can play their instruments at their best.

Since the college is a bit far from the highway, the concert started a bit late, as most of the guests had some trouble finding the place.

The concert was divided into two acts. The first consisted of English songs and medleys. The audience was very well entertained with hit songs from the Beatles and ABBA, not forgetting the wonderful performance of the difficult scores of Around the World in 80 Days and the African medley.

The entr’acte lasted for about 15 minutes. While waiting, we went out to stretch our legs. The guests were busy buying drinks and snacks. They also took the opportunity to take photos outside the hall. By the way, we were not supposed to record anything inside the hall during the performance, so the photos here were all taken before the show, during the entr’acte, or after the show.

The guest artist for the night was Nash, formerly from the famous rock group Lefthanded. He sang 3 songs. My favourite was of course – Ku di halaman Rindu 🙂

The second act was better than the first one. The Malay songs rearranged by En Suhaimi were amazingly beautiful. I have heard TKC WO play Puteri Gunung Ledang (PGL) wonderfully well. In fact, I have also heard the music at the PGL show itself. But the arrangement of the piece from PGL performed by the SDAR Symphonic Band actually brought tears to my eyes. Lovely.

The performance ended with a medley of hari raya songs. Everyone enjoyed the show very much. Sweet and simple but at the same time memorable.

The guest of honor for the night was Dato’ Johari Salleh. The boys had a nice surprise when he insisted on going on stage and personally said a few words to them. I am sure he enjoyed their performance as much as I did.

The proud father with his saxophonist son.

SDAR band president 2016 with TKC band president 1987 😀

Firdaus’s mom and I used to study at the same school way back in the 80s. Now our boys are classmates. It’s a small world after all, right?

Hibiscus

2016 IEEE International Conference on Automatic Control and Intelligent Systems (I2CACIS 2016)

Chaos Engineering

by Ali Basiri, Niosha Behnam, Ruud de Rooij, Lorin Hochstein, Luke Kosewski, Justin Reynolds, and Casey Rosenthal

Netflix

This is an excerpt of the article published in the July 2016 edition of Computing Now at https://www.computer.org/cms/Computer.org/ComputingNow/issues/2016/07/mso2016030035.pdf

Modern software-based services are implemented as distributed systems with complex behavior and failure modes. Chaos engineering uses experimentation to ensure system availability. Netflix engineers have developed principles of chaos engineering that describe how to design and run experiments.

THIRTY YEARS AGO, Jim Gray noted that “A way to improve availability is to install proven hardware and software, and then leave it alone.”1 For companies that provide services over the Internet, “leaving it alone” isn’t an option. Such service providers must continually make changes to increase the service’s value, such as adding features and improving performance. At Netflix, engineers push new code into production and modify runtime configuration parameters hundreds of times a day. (For a look at Netflix and its system architecture, see the sidebar.) Availability is still important; a customer who can’t watch a video because of a service outage might not be a customer for long.

But to achieve high availability, we need to apply a different approach than what Gray advocated. For years, Netflix has been running Chaos Monkey, an internal service that randomly selects virtual-machine instances that host our production services and terminates them.2 Chaos Monkey aims to encourage Netflix engineers to design software services that can withstand failures of individual instances. It’s active only during normal working hours so that engineers can respond quickly if a service fails owing to an instance termination.
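The behavior described above (pick a random instance, terminate it, and do so only during working hours) can be sketched in a few lines. This is a minimal illustration, not Netflix's implementation: the function names, the Monday-to-Friday 09:00-17:00 window, and the `terminate` callback are all assumptions made for the example.

```python
import random
from datetime import datetime


def is_working_hours(now, start_hour=9, end_hour=17):
    """Assumed window: Monday-Friday, start_hour <= hour < end_hour."""
    return now.weekday() < 5 and start_hour <= now.hour < end_hour


def chaos_step(instances, terminate, now, rng=random):
    """Terminate one randomly chosen instance, but only during working hours.

    `terminate` is a hypothetical callback that would ask the cloud
    provider to kill the instance. Returns the chosen instance id, or
    None when nothing was terminated.
    """
    if not instances or not is_working_hours(now):
        return None
    victim = rng.choice(list(instances))
    terminate(victim)
    return victim


if __name__ == "__main__":
    killed = []
    fleet = ["i-a", "i-b", "i-c"]  # made-up instance ids
    # A Wednesday morning: one instance is terminated.
    print(chaos_step(fleet, killed.append, datetime(2016, 7, 20, 10, 0)))
    # A Saturday morning: outside the assumed window, nothing happens.
    print(chaos_step(fleet, killed.append, datetime(2016, 7, 23, 10, 0)))
```

Passing the clock and the random source in as arguments keeps the sketch easy to test, and the working-hours guard sits in front of the random choice so that no instance is even selected when engineers are unlikely to be around to respond.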

Chaos Monkey has proven successful; today all Netflix engineers design their services to handle instance failures as a matter of course.

That success encouraged us to extend the approach of injecting failures into the production system to improve reliability. For example, we perform Chaos Kong exercises that simulate the failure of an entire Amazon EC2 (Elastic Compute Cloud) region. We also run Failure Injection Testing (FIT) exercises in which we cause requests between Netflix services to fail and verify that the system degrades gracefully.3 Over time, we realized that these activities share underlying themes that are subtler than simply “break things in production.” We also noticed that organizations such as Amazon,4 Google,4 Microsoft,5 and Facebook6 were applying similar techniques to test their systems’ resilience. We believe that these activities form part of a discipline that’s emerging in our industry; we call this discipline chaos engineering. Specifically, chaos engineering involves experimenting on a distributed system to build confidence in its capability to withstand turbulent conditions in production. These conditions could be anything from a hardware failure, to an unexpected surge in client requests, to a malformed value in a runtime configuration parameter. Our experience has led us to determine principles of chaos engineering (for an overview, see http://principlesofchaos.org), which we elaborate on here.
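The idea behind a failure-injection exercise, making calls between services fail on purpose and checking that the caller degrades gracefully, can be illustrated with a toy experiment. Everything here is hypothetical (the `recommendations` service, the fallback list, the 30 percent failure rate); it is a sketch of the general technique, not of Netflix's FIT tooling.

```python
import random


class InjectedFailure(Exception):
    """Raised in place of a real dependency error during an experiment."""


def with_failure_injection(call, failure_rate, rng):
    """Wrap `call` so that a fraction of invocations fail on purpose."""
    def wrapped(*args, **kwargs):
        if rng.random() < failure_rate:
            raise InjectedFailure("injected failure")
        return call(*args, **kwargs)
    return wrapped


def recommendations(user_id):
    # Stand-in for a downstream service call (hypothetical).
    return [f"personalized-title-for-{user_id}"]


def home_page(user_id, fetch_recommendations):
    """Degrade gracefully: fall back to a generic list if the dependency fails."""
    try:
        return fetch_recommendations(user_id)
    except InjectedFailure:
        return ["popular-title"]


def run_experiment(trials=1000, failure_rate=0.3, seed=0):
    """Return (pages served, pages served in degraded mode)."""
    rng = random.Random(seed)
    flaky = with_failure_injection(recommendations, failure_rate, rng)
    pages = [home_page("u1", flaky) for _ in range(trials)]
    degraded = sum(page == ["popular-title"] for page in pages)
    return len(pages), degraded


if __name__ == "__main__":
    served, degraded = run_experiment()
    print(f"served {served} pages, {degraded} in degraded mode")
```

The check that matters is that every request still gets a page: an injected failure shows up as a generic list instead of a personalized one, never as an error reaching the user.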

Thoughts for the day

Salam, Selamat Sejahtera and Eid Mubarak to all UTM staff,

—

Primary Email : raihan.ak@utm.my

Integrity Enrichment – Disciplinary Action – Surcharge

Ungku-Omar Newton Fund Grant Success

—

Fatal Tesla Self-Driving Car Crash Reminds Us That Robots Aren’t Perfect

This article is obtained from http://spectrum.ieee.org/cars-that-think/transportation/self-driving/fatal-tesla-autopilot-crash-reminds-us-that-robots-arent-perfect?utm_campaign=TechAlert_07-21-16&utm_medium=Email&utm_source=TechAlert&bt_alias=eyJ1c2VySWQiOiAiOWUzYzU2NzUtMDNhYS00YzBjLWIxMTItMWUxMjlkMjZhNjE0In0%3D

On 7 May, a Tesla Model S was involved in a fatal accident in Florida. At the time of the accident, the vehicle was driving itself, using its Autopilot system. The system didn’t stop for a tractor-trailer attempting to turn across a divided highway, and the Tesla collided with the trailer. In a statement, Tesla Motors said this is the “first known fatality in just over 130 million miles [210 million km] where Autopilot was activated” and suggested that this ratio makes the Autopilot safer than an average vehicle. Early this year, Tesla CEO Elon Musk told reporters that the Autopilot system in the Model S was “probably better than a person right now.”

The U.S. National Highway Traffic Safety Administration (NHTSA) has opened a preliminary evaluation into the performance of Autopilot, to determine whether the system worked as it was expected to. For now, we’ll take a closer look at what happened in Florida, how the accident could have been prevented, and what this could mean for self-driving cars.

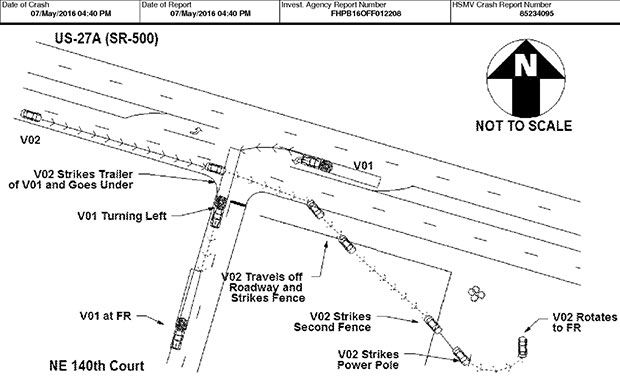

According to an official report of the accident, the crash occurred on a divided highway with a median strip. A tractor-trailer truck in the westbound lane made a left turn onto a side road, making a perpendicular crossing in front of oncoming traffic in the eastbound lane. The driver of the truck didn’t see the Tesla, nor did the self-driving Tesla and its human occupant notice the trailer. The Tesla collided with the truck without the human or the Autopilot system ever applying the brakes. The Tesla passed under the center of the trailer at windshield height and came to rest at the side of the road after hitting a fence and a pole.

Tesla’s statement and a tweet from Elon Musk provide some insight as to why the Autopilot system failed to stop for the trailer. The autopilot relies on cameras and radar to detect and avoid obstacles, and the cameras weren’t able to effectively differentiate “the white side of the tractor trailer against a brightly lit sky.” The radar should not have had any problems detecting the trailer, but according to Musk, “radar tunes out what looks like an overhead road sign to avoid false braking events.”

We don’t know all the details of how the Tesla S’s radar works, but the fact that the radar could likely see underneath the trailer (between its front and rear wheels), coupled with a position that was perpendicular to the road (and mostly stationary) could easily lead to a situation where a computer could reasonably assume that it was looking at an overhead road sign. And most of the time, the computer would be correct.

Tesla’s statement also emphasized that, despite being called “Autopilot,” the system is assistive only and is not intended to assume complete control over the vehicle:

It is important to note that Tesla disables Autopilot by default and requires explicit acknowledgement that the system is new technology and still in a public beta phase before it can be enabled. When drivers activate Autopilot, the acknowledgment box explains, among other things, that Autopilot “is an assist feature that requires you to keep your hands on the steering wheel at all times,” and that “you need to maintain control and responsibility for your vehicle” while using it. Additionally, every time that Autopilot is engaged, the car reminds the driver to “Always keep your hands on the wheel. Be prepared to take over at any time.” The system also makes frequent checks to ensure that the driver’s hands remain on the wheel and provides visual and audible alerts if hands-on is not detected. It then gradually slows down the car until hands-on is detected again.

I don’t believe that it’s Tesla’s intention to blame the driver in this situation, but the issue (and this has been an issue from the beginning) is that it’s not entirely clear whether drivers are supposed to feel like they can rely on the Autopilot or not. I would guess Tesla’s position on this would be that most of the time, yes, you can rely on it, but because Tesla has no idea when you won’t be able to rely on it, you can’t really rely on it. In other words, the Autopilot works very well under ideal conditions. You shouldn’t use it when conditions are not ideal, but the problem with driving is that conditions can very occasionally turn from ideal to not ideal almost instantly, and the Autopilot can’t predict when this will happen. Again, this is a fundamental issue with any car that has an “assistive” autopilot that asks for a human to remain in the loop, and is why companies like Google have made their explicit goal to remove human drivers from the loop entirely.

The fact that this kind of accident has happened once means that there is a reasonable chance that it, or something very much like it, could happen again. Tesla will need to address this, of course, although this particular situation also suggests ways in which vehicle safety in general could be enhanced.

Here are a few ways in which this accident scenario could be addressed, both by Tesla itself, and by lawmakers more generally:

A Tesla Software Fix: It’s possible that Tesla’s Autopilot software could be changed to more reliably differentiate between trailers and overhead road signs, if it turns out that that was the issue. There may be a bug in the software, or it could be calibrated too heavily in favor of minimizing false braking events.

A Tesla Hardware Fix: There are some common lighting conditions in which cameras do very poorly (wet roads, reflective surfaces, or low sun angles), and the resolution of radar is relatively low. Almost every other self-driving car with a goal of sophisticated autonomy uses LIDAR to fill this kind of sensor gap, since LIDAR provides high resolution data out to a distance of several hundred meters with much higher resiliency to ambient lighting effects. Elon Musk doesn’t believe that LIDAR is necessary for autonomous cars, however:

For full autonomy you’d really want to have a more comprehensive sensor suite and computer systems that are fail proof.

That said, I don’t think you need LIDAR. I think you can do this all with passive optical and then with maybe one forward RADAR… if you are driving fast into rain or snow or dust. I think that completely solves it without the use of LIDAR. I’m not a big fan of LIDAR, I don’t think it makes sense in this context.

Musk may be right, but again, almost every other self-driving car uses LIDAR. Virtually every other company trying to make autonomy work has agreed that the kind of data that LIDAR can provide is necessary and unique, and it does seem like it might have prevented this particular accident, and could prevent accidents like it.

Vehicle-to-Vehicle Communication: The NHTSA is currently studying vehicle-to-vehicle (V2V) communication technology, which would allow vehicles “to communicate important safety and mobility information to one another that can help save lives, prevent injuries, ease traffic congestion, and improve the environment.” If (or hopefully when) vehicles are able to tell all other vehicles around them exactly where they are and where they’re going, accidents like these will become much less frequent.

Side Guards on Trailers: The U.S. has relatively weak safety regulations regarding trailer impact safety systems. Trailers are required to have rear underride guards, but compared with other countries (like Canada), the strength requirements are low. The U.S. does not require side underride guards. Europe does, but they’re designed to protect pedestrians and bicyclists, not passenger vehicles. An IIHS analysis of fatal crashes involving passenger cars and trucks found that “88 percent involving the side of the large truck… produced underride,” where the vehicle passes under the truck. This bypasses almost all front-impact safety systems on the passenger vehicle, and as Tesla points out, “had the Model S impacted the front or rear of the trailer, even at high speed, its advanced crash safety system would likely have prevented serious injury as it has in numerous other similar incidents.”

If Tesla comes up with a software fix, which seems like the most likely scenario, all other Tesla Autopilot systems will immediately benefit from improved safety. This is one of the major advantages of autonomous cars in general: accidents are inevitable, but unlike with humans, each kind of accident only has to happen once. Once a software fix has been deployed, no Tesla autopilot will make this same mistake ever again. Similar mistakes are possible, but as Tesla says, “as more real-world miles accumulate and the software logic accounts for increasingly rare events, the probability of injury will keep decreasing.”

The near infinite variability of driving on real-world roads full of unpredictable humans means that it’s unrealistic to think that the probability of injury while driving, even if your car is fully autonomous, will ever reach zero. But the point is that autonomous cars, and cars with assistive autonomy, are already much safer than cars driven by humans without the aid of technology. This is Tesla’s first Autopilot-related fatality in 130 million miles [210 million km]: humans in the U.S. experience a driving fatality on average every 90 million miles [145 million km], and in the rest of the world, it’s every 60 million miles [100 million km]. It’s already far safer to have these systems working for us, and they’re only going to get better at what they do.
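The comparison in the paragraph above is easier to read when the three figures are put on the same scale. The short sketch below takes the quoted miles-per-fatality numbers at face value and converts them to fatalities per 100 million miles; the dictionary labels and function names are made up for the example, and the Autopilot figure rests on the single fatality reported in the article.

```python
# Miles driven per fatality, as quoted in the article.
MILES_PER_FATALITY = {
    "Tesla Autopilot": 130e6,
    "US average": 90e6,
    "Rest of world": 60e6,
}


def fatalities_per_100m_miles(miles_per_fatality):
    """Convert 'one fatality every N miles' into a rate per 100 million miles."""
    return 100e6 / miles_per_fatality


def relative_to(baseline, other):
    """How many times farther `other` travels per fatality than `baseline`."""
    return MILES_PER_FATALITY[other] / MILES_PER_FATALITY[baseline]


if __name__ == "__main__":
    for label, miles in MILES_PER_FATALITY.items():
        print(f"{label}: {fatalities_per_100m_miles(miles):.2f} per 100M miles")
    print(f"vs US average: {relative_to('US average', 'Tesla Autopilot'):.2f}x")
    print(f"vs rest of world: {relative_to('Rest of world', 'Tesla Autopilot'):.2f}x")
```

On these numbers, Autopilot covers roughly 1.4 times the distance per fatality of the U.S. average and a little over twice that of the rest of the world.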