How can visual feedback make penetrating a virtual surface feel more logical and more realistic?

To answer this question, we tried three approaches: highlighting the boundary and depth of penetration as the hand passes through a mesh, adding a color gradient to the fingertips as they approach interactive objects and UI elements, and creating reactive affordances for unpredictable grabs. But first, let's look at how the Leap Motion Interaction Engine handles interactions between hands and objects.

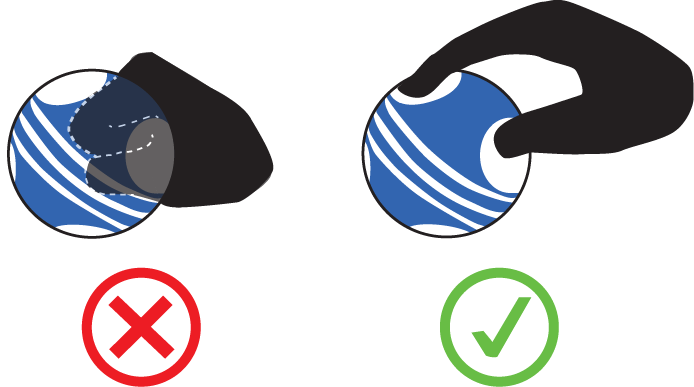

In a virtual world, this kind of visual clipping occurs whether you touch a stationary surface (such as a wall) or an interactive object. The two core interactions in the Leap Motion libraries, flipping and grabbing, almost always involve the user's hand penetrating the interactive object.

Similarly, when interacting with a physics-based user interface (such as the Leap Motion Interaction Engine's InteractionButtons, which depress along the Z axis), the fingertips still pass slightly through the interactive object once the UI element reaches the end of its travel distance.

Experiment #1: Highlighting the boundary and depth when passing through a mesh

In our first experiment, we proposed that the boundary should be visible whenever the hand intersects another mesh. The shallow part of the hand stays visible, but its color or transparency changes.

To achieve this, we applied a shader to the hand mesh. For each pixel on the hand, we measure its distance from the camera and compare it with the scene depth (read from the camera's depth texture). If the two values are relatively close, we make the pixel glow, and the glow gets stronger as the values get closer.
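The per-pixel comparison can be sketched outside a shader. The following is a minimal Python illustration, not the shader we used: the function name `intersection_glow`, the linear falloff, and the `glow_range` and `max_intensity` parameters are all assumptions made for the example.

```python
def intersection_glow(pixel_depth: float, scene_depth: float,
                      glow_range: float = 0.02,
                      max_intensity: float = 1.0) -> float:
    """Glow strength for one hand pixel (hypothetical helper).

    pixel_depth: distance from the camera to the hand pixel.
    scene_depth: distance stored in the depth texture at that pixel.
    glow_range:  assumed depth gap at which the glow fades to zero.
    """
    if glow_range <= 0:
        raise ValueError("glow_range must be positive")
    gap = abs(scene_depth - pixel_depth)
    if gap >= glow_range:
        return 0.0  # too far from any surface: no glow
    # Assumed linear falloff: strongest when the two depths coincide.
    return max_intensity * (1.0 - gap / glow_range)


if __name__ == "__main__":
    for scene in (1.0, 1.01, 1.5):
        print(scene, intersection_glow(1.0, scene))
```

Lowering `glow_range` and `max_intensity` corresponds to turning the depth and intensity of the effect down.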

With the glow's intensity and depth turned down to a minimum, this seems like an effect that could be applied universally across an application without being distracting.

Experiment #2: Adding a color gradient to the fingertips when approaching interactive objects and UI elements

In the second experiment, we decided to change the color of the fingertips to match the surface color of the object we want to interact with. The closer the hand gets to the object, the closer the fingertip color gets to the surface color. This helps the user judge the distance between the fingertip and the object's surface more easily, while reducing the likelihood that the fingertip will penetrate the surface. In addition, even if the fingertip does penetrate the mesh, the resulting visual clipping is less awkward, because the fingertip and the object's surface are the same color.

Whenever we hover over an InteractionObject, we check the distance from each fingertip to the surface of the object. Then, we use this data to drive a gradient change that affects the color of each fingertip independently.
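As a rough sketch of that distance-driven gradient, the Python below blends each fingertip toward the surface color independently. The names `fingertip_color` and `lerp_color`, the linear blend, and the `max_distance` cutoff are assumptions for illustration, not Interaction Engine API.

```python
from typing import Dict, Tuple

Color = Tuple[float, float, float]  # RGB components in 0..1


def lerp_color(a: Color, b: Color, t: float) -> Color:
    """Linearly blend from color a (t=0) to color b (t=1)."""
    return tuple(x + (y - x) * t for x, y in zip(a, b))


def fingertip_color(base: Color, surface: Color, distance: float,
                    max_distance: float = 0.1) -> Color:
    """Blend toward the surface color as the fingertip gets closer.

    max_distance is an assumed range beyond which the fingertip
    keeps its base color.
    """
    ratio = min(max(distance / max_distance, 0.0), 1.0)
    return lerp_color(base, surface, 1.0 - ratio)


if __name__ == "__main__":
    skin: Color = (1.0, 1.0, 1.0)
    button: Color = (0.0, 0.0, 1.0)
    # Each fingertip has its own distance, so each gets its own color.
    distances: Dict[str, float] = {"thumb": 0.2, "index": 0.05, "middle": 0.0}
    for finger, d in distances.items():
        print(finger, fingertip_color(skin, button, d))
```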

This experiment really did help us judge the distance between our fingertips and the object's surface more accurately. It also made it easier to tell what we were closest to touching. Combined with the effect from Experiment #1, it makes the phases of an interaction (approach, contact, intersection, grasp) clearer.

Experiment #3: Reactive affordances for unpredictable grabs

How do you grab a virtual object in VR? You might close your fist around it, pinch it, or clasp it. Previously, we have tried designing affordances, such as handles, in the hope that these cues would guide users in how to grab objects.

By casting a ray from each finger joint and checking where it hits the InteractionObject, we spawn a shallow dimple mesh at the ray's hit point. We align the dimple with the hit point's normal and use the raycast's hit distance (essentially how deep the finger is inside the object) to drive a blendshape that expands the dimple.
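To make the mapping from hit data to dimple concrete, here is a small Python sketch. The `RayHit` and `Dimple` types, the `place_dimple` function, the `max_depth` value, and the 0 to 100 weight range are all assumptions for the example. They stand in for whatever the engine's raycast and blendshape calls actually return and accept.

```python
import math
from dataclasses import dataclass
from typing import Tuple

Vec3 = Tuple[float, float, float]


@dataclass
class RayHit:
    """Hypothetical raycast result for one finger joint."""
    point: Vec3    # where the ray meets the object
    normal: Vec3   # surface normal at that point
    depth: float   # how far the finger is inside the object


@dataclass
class Dimple:
    position: Vec3  # dimple mesh is placed at the hit point
    up: Vec3        # dimple is aligned with the (unit) hit normal
    weight: float   # blendshape weight, assumed range 0..100


def place_dimple(hit: RayHit, max_depth: float = 0.03) -> Dimple:
    """Position, orient, and size a dimple from one ray hit."""
    length = math.sqrt(sum(c * c for c in hit.normal))
    if length == 0:
        raise ValueError("hit normal must be non-zero")
    unit_normal = tuple(c / length for c in hit.normal)
    # Deeper penetration opens the dimple further, up to max_depth.
    t = min(max(hit.depth / max_depth, 0.0), 1.0)
    return Dimple(position=hit.point, up=unit_normal, weight=t * 100.0)


if __name__ == "__main__":
    hit = RayHit(point=(0.0, 1.0, 0.5), normal=(0.0, 2.0, 0.0), depth=0.015)
    print(place_dimple(hit))
```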

We then took this concept further. Could the object anticipate the hand's approach and respond before the hand touches its surface? To do this, we lengthened the fingertip raycast so that a hit registers before the finger touches the surface. Then we created a two-part prefab consisting of (1) a circular mesh and (2) a cylindrical mesh with a depth mask that stops any pixels behind it from rendering.
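One way to picture the lengthened ray: extend it past the fingertip by a look-ahead distance and turn the remaining gap into a 0 to 1 response. The Python below is only a sketch under that assumption. `approach_amount`, `finger_length`, and `lookahead` are hypothetical names, and the linear ramp is an illustrative choice.

```python
from typing import Optional


def approach_amount(hit_distance: Optional[float],
                    finger_length: float,
                    lookahead: float) -> float:
    """How strongly the surface should respond, from 0.0 to 1.0.

    hit_distance:  distance along the ray to the surface, or None
                   if the extended ray hit nothing.
    finger_length: assumed distance from the ray origin to the fingertip.
    lookahead:     assumed extra ray length beyond the fingertip.
    """
    if lookahead <= 0:
        raise ValueError("lookahead must be positive")
    if hit_distance is None:
        return 0.0  # nothing within reach of the extended ray
    gap = hit_distance - finger_length  # space between fingertip and surface
    if gap <= 0:
        return 1.0  # fingertip is touching or inside the object
    if gap >= lookahead:
        return 0.0
    return 1.0 - gap / lookahead  # ramps up as the finger approaches


if __name__ == "__main__":
    for d in (None, 0.08, 0.055, 0.02):
        print(d, approach_amount(d, finger_length=0.03, lookahead=0.05))
```

A value like this could scale the circular mesh or fade it in before contact.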

We also tried adding a fingertip color gradient. This time, though, the gradient is driven not by proximity to the object but by how deep the finger is inside it.

We set up the layers so that the depth mask hides the InteractionObject's mesh but still renders the user's hand mesh. These effects make grabbing feel more coherent, as if our fingers were being invited to pass through the mesh. Obviously, this approach would need a more complex system to handle objects other than spheres, the palm of the hand, and fingers that are close together.