Coexisting with AI holograms

AI holographic technology has risen to new heights recently, with the Hypervsn SmartV Digital Avatar released at the start of the year. The AI hologram runs on the SmartV Window Display, a gesture-based 3D display and merchandising system, and allows real-time interaction with customers.

Universiti Teknologi Malaysia (UTM) has also developed its first home-grown real-time holo professor, which can project a lecture being delivered by a lecturer at another location. With Malaysia breaking boundaries in extended reality (XR) technology, can the next wave of hologram technology be fully AI-powered without constraints?

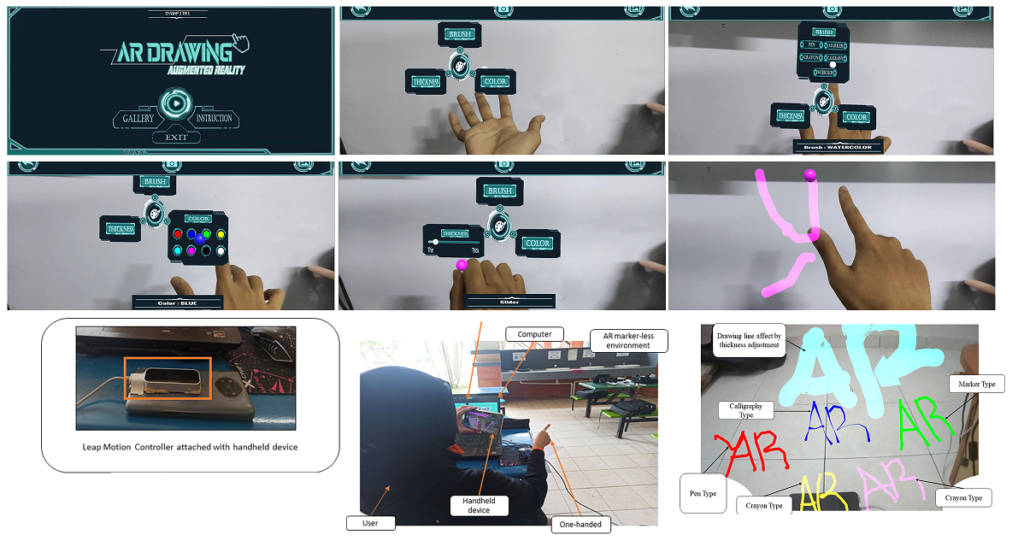

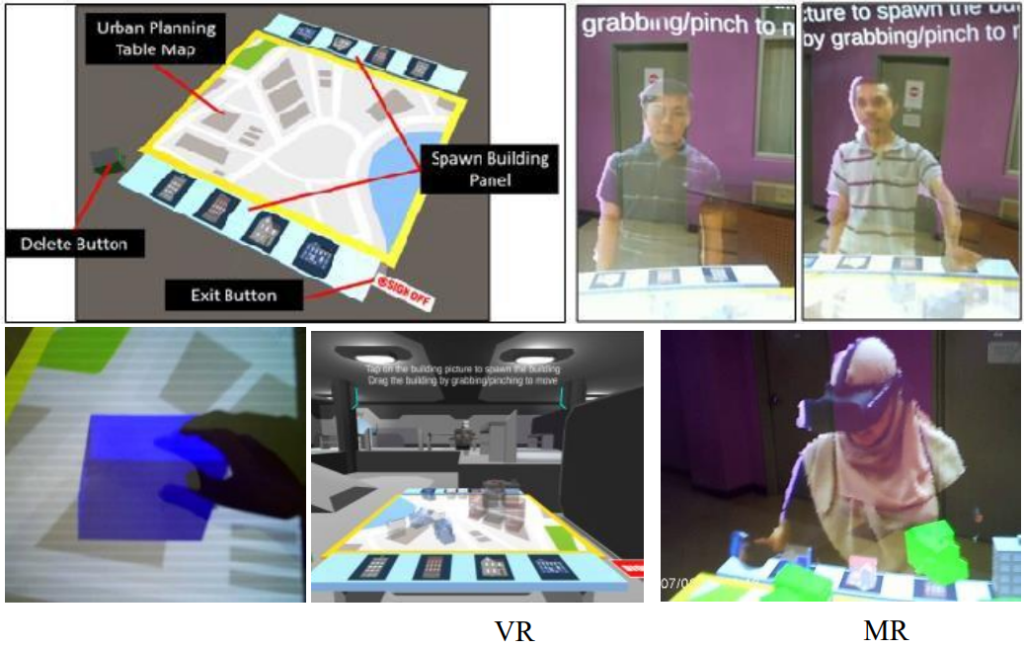

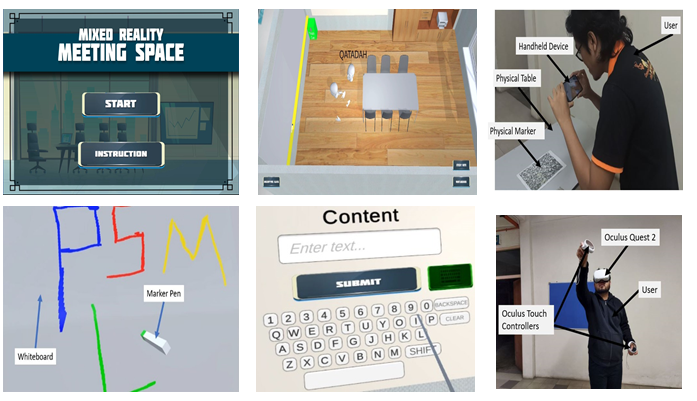

Having created Malaysia’s first home-grown holo professor, Dr Ajune Wanis Ismail, senior lecturer in computer graphics and computer vision at UTM’s Faculty of Computing, says that XR hologram systems can be complex to set up and maintain. Technical issues, such as connectivity problems or software glitches, could disrupt lessons.

AI algorithms are used to enhance the accuracy of holographic content, reducing artifacts and improving image quality. Developing such holographic solutions for XR remains challenging, however, because the technology is relatively new and evolving rapidly, with new breakthroughs emerging frequently.

“Building and deploying AI-powered holographic systems can be costly [in terms of hardware and software components],” she says.

Incorporating AI into holograms could place immense demands on computational power. Most existing holograms present non-real-time content prepared through a video editing loop, whereas AI models for holography are computationally intensive, says Ajune.

She emphasises the importance of achieving high-fidelity reconstruction when handling complex, dynamic scenes in which objects or viewers are in motion.

Achieving real-time interaction with holographic content also demands low latency. “Researchers are developing more efficient algorithms and leveraging hardware acceleration [such as graphics processing units] to reduce computational demands,” says Ajune.

There is no doubt that XR hologram systems are complicated and challenging to integrate with AI. However, the prospect of replicating environments and enabling real-time global communication without the need for physical presence is generating excitement.

As we advance into the era of digitalisation, people need to start familiarising themselves with this technology and become proficient users of it, Ajune believes.

Read more: https://theedgemalaysia.com/node/689700

This article first appeared in Digital Edge, The Edge Malaysia Weekly on November 13, 2023 – November 19, 2023

https://www.theedgesingapore.com/digitaledge/focus/coexisting-ai-holograms

This article first appeared in The Edge Malaysia. It has been edited for clarity and length by The Edge Singapore.