This article is obtained from http://spectrum.ieee.org/cars-that-think/transportation/self-driving/fatal-tesla-autopilot-crash-reminds-us-that-robots-arent-perfect?utm_campaign=TechAlert_07-21-16&utm_medium=Email&utm_source=TechAlert&bt_alias=eyJ1c2VySWQiOiAiOWUzYzU2NzUtMDNhYS00YzBjLWIxMTItMWUxMjlkMjZhNjE0In0%3D

On 7 May, a Tesla Model S was involved in a fatal accident in Florida. At the time of the accident, the vehicle was driving itself, using its Autopilot system. The system didn’t stop for a tractor-trailer attempting to turn across a divided highway, and the Tesla collided with the trailer. In a statement, Tesla Motors said this is the “first known fatality in just over 130 million miles [210 million km] where Autopilot was activated” and suggested that this ratio makes the Autopilot safer than an average vehicle. Early this year, Tesla CEO Elon Musk told reporters that the Autopilot system in the Model S was “probably better than a person right now.”

The U.S. National Highway Traffic Safety Administration (NHTSA) has opened a preliminary evaluation into the performance of Autopilot, to determine whether the system worked as it was expected to. For now, we’ll take a closer look at what happened in Florida, how the accident could have been prevented, and what this could mean for self-driving cars.

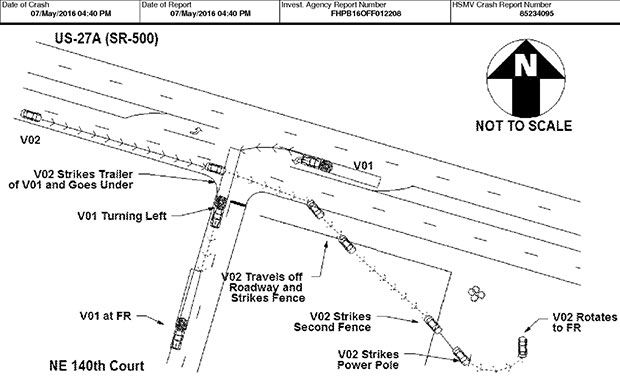

According to an official report of the accident, the crash occurred on a divided highway with a median strip. A tractor-trailer truck in the westbound lane made a left turn onto a side road, making a perpendicular crossing in front of oncoming traffic in the eastbound lane. The driver of the truck didn’t see the Tesla, and neither the self-driving Tesla nor its human occupant noticed the trailer. The Tesla collided with the truck without the human or the Autopilot system ever applying the brakes. The Tesla passed under the center of the trailer at windshield height and came to rest at the side of the road after hitting a fence and a pole.

Tesla’s statement and a tweet from Elon Musk provide some insight as to why the Autopilot system failed to stop for the trailer. Autopilot relies on cameras and radar to detect and avoid obstacles, and the cameras weren’t able to effectively differentiate “the white side of the tractor trailer against a brightly lit sky.” The radar should not have had any problems detecting the trailer, but according to Musk, “radar tunes out what looks like an overhead road sign to avoid false braking events.”

We don’t know all the details of how the Model S’s radar works, but the radar could likely see underneath the trailer (between its front and rear wheels), and the trailer was perpendicular to the road and mostly stationary. Together, these factors could easily lead a computer to reasonably assume that it was looking at an overhead road sign. And most of the time, the computer would be correct.

Tesla’s statement also emphasized that, despite being called “Autopilot,” the system is assistive only and is not intended to assume complete control over the vehicle:

It is important to note that Tesla disables Autopilot by default and requires explicit acknowledgement that the system is new technology and still in a public beta phase before it can be enabled. When drivers activate Autopilot, the acknowledgment box explains, among other things, that Autopilot “is an assist feature that requires you to keep your hands on the steering wheel at all times,” and that “you need to maintain control and responsibility for your vehicle” while using it. Additionally, every time that Autopilot is engaged, the car reminds the driver to “Always keep your hands on the wheel. Be prepared to take over at any time.” The system also makes frequent checks to ensure that the driver’s hands remain on the wheel and provides visual and audible alerts if hands-on is not detected. It then gradually slows down the car until hands-on is detected again.

I don’t believe that it’s Tesla’s intention to blame the driver in this situation, but the issue (and this has been an issue from the beginning) is that it’s not entirely clear whether drivers are supposed to feel like they can rely on the Autopilot or not. I would guess Tesla’s position on this would be that most of the time, yes, you can rely on it, but because Tesla has no idea when you won’t be able to rely on it, you can’t really rely on it. In other words, the Autopilot works very well under ideal conditions. You shouldn’t use it when conditions are not ideal, but the problem with driving is that conditions can very occasionally turn from ideal to not ideal almost instantly, and the Autopilot can’t predict when this will happen. Again, this is a fundamental issue with any car that has an “assistive” autopilot that asks for a human to remain in the loop, and is why companies like Google have made it their explicit goal to remove human drivers from the loop entirely.

The fact that this kind of accident has happened once means that there is a reasonable chance that it, or something very much like it, could happen again. Tesla will need to address this, of course, although this particular situation also suggests ways in which vehicle safety in general could be enhanced.

Here are a few ways in which this accident scenario could be addressed, both by Tesla itself, and by lawmakers more generally:

A Tesla Software Fix: It’s possible that Tesla’s Autopilot software could be changed to more reliably differentiate between trailers and overhead road signs, if it turns out that that was the issue. There may be a bug in the software, or it could be calibrated too heavily in favor of minimizing false braking events.

A Tesla Hardware Fix: There are some common lighting conditions in which cameras do very poorly (wet roads, reflective surfaces, or low sun angles), and the resolution of radar is relatively low. Almost every other self-driving car with a goal of sophisticated autonomy uses LIDAR to fill this kind of sensor gap, since LIDAR provides high resolution data out to a distance of several hundred meters with much higher resiliency to ambient lighting effects. Elon Musk doesn’t believe that LIDAR is necessary for autonomous cars, however:

For full autonomy you’d really want to have a more comprehensive sensor suite and computer systems that are fail proof.

That said, I don’t think you need LIDAR. I think you can do this all with passive optical and then with maybe one forward RADAR… if you are driving fast into rain or snow or dust. I think that completely solves it without the use of LIDAR. I’m not a big fan of LIDAR, I don’t think it makes sense in this context.

Musk may be right, but again, almost every other self-driving car uses LIDAR. Virtually every other company trying to make autonomy work has agreed that the kind of data that LIDAR can provide is necessary and unique, and it does seem like it might have prevented this particular accident, and could prevent accidents like it.

Vehicle-to-Vehicle Communication: The NHTSA is currently studying vehicle-to-vehicle (V2V) communication technology, which would allow vehicles “to communicate important safety and mobility information to one another that can help save lives, prevent injuries, ease traffic congestion, and improve the environment.” If (or hopefully when) vehicles are able to tell all other vehicles around them exactly where they are and where they’re going, accidents like these will become much less frequent.

Side Guards on Trailers: The U.S. has relatively weak safety regulations regarding trailer impact safety systems. Trailers are required to have rear underride guards, but compared with other countries (like Canada), the strength requirements are low. The U.S. does not require side underride guards. Europe does, but they’re designed to protect pedestrians and bicyclists, not passenger vehicles. An IIHS analysis of fatal crashes involving passenger cars and trucks found that “88 percent involving the side of the large truck… produced underride,” where the vehicle passes under the truck. This bypasses almost all front-impact safety systems on the passenger vehicle, and as Tesla points out, “had the Model S impacted the front or rear of the trailer, even at high speed, its advanced crash safety system would likely have prevented serious injury as it has in numerous other similar incidents.”

If Tesla comes up with a software fix, which seems like the most likely scenario, all other Tesla Autopilot systems will immediately benefit from improved safety. This is one of the major advantages of autonomous cars in general: accidents are inevitable, but unlike with humans, each kind of accident only has to happen once. Once a software fix has been deployed, no Tesla Autopilot will make this same mistake ever again. Similar mistakes are possible, but as Tesla says, “as more real-world miles accumulate and the software logic accounts for increasingly rare events, the probability of injury will keep decreasing.”

The near infinite variability of driving on real-world roads full of unpredictable humans means that it’s unrealistic to think that the probability of injury while driving, even if your car is fully autonomous, will ever reach zero. But the point is that autonomous cars, and cars with assistive autonomy, are already much safer than cars driven by humans without the aid of technology. This is Tesla’s first Autopilot-related fatality in 130 million miles [210 million km]: humans in the U.S. experience a driving fatality on average every 90 million miles [145 million km], and in the rest of the world, it’s every 60 million miles [100 million km]. It’s already far safer to have these systems working for us, and they’re only going to get better at what they do.
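
To make the comparison above easier to follow, here is a minimal Python sketch that restates the three miles-per-fatality figures quoted in this article as fatalities per 100 million miles, and converts them to kilometers. The function and variable names are illustrative assumptions, not anything published by Tesla or NHTSA, and the sketch does only the arithmetic: it adds no data beyond the numbers quoted above.

```python
# Miles driven per fatality, as quoted in the article (rounded figures).
MILES_PER_FATALITY = {
    "Tesla Autopilot": 130e6,
    "U.S. average": 90e6,
    "Worldwide average": 60e6,
}

KM_PER_MILE = 1.609344  # exact definition of the international mile


def fatalities_per_100m_miles(miles_per_fatality):
    """Convert 'one fatality every N miles' into fatalities per 100 million miles."""
    return 100e6 / miles_per_fatality


def summarize(figures):
    """Return (label, million km per fatality, fatalities per 100M miles) rows."""
    rows = []
    for label, miles in figures.items():
        million_km = miles * KM_PER_MILE / 1e6
        rows.append((label, million_km, fatalities_per_100m_miles(miles)))
    return rows


if __name__ == "__main__":
    for label, million_km, rate in summarize(MILES_PER_FATALITY):
        print(
            f"{label}: about {million_km:.0f} million km per fatality, "
            f"{rate:.2f} fatalities per 100 million miles"
        )
```

Expressing each figure as a rate per fixed distance puts all three on the same scale, so a lower number means fewer fatalities for the same amount of driving. The kilometer values come out slightly different from the bracketed ones in the text because those are rounded.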