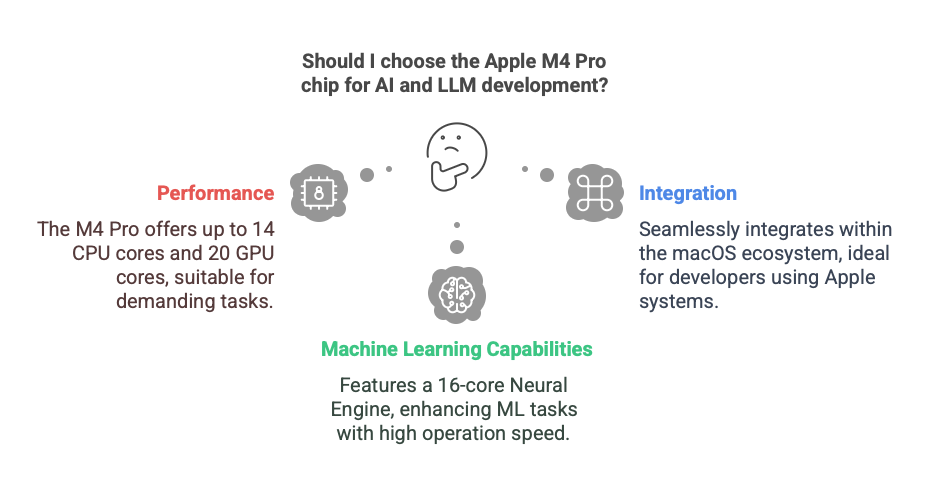

In artificial intelligence (AI) and large language model (LLM) development, hardware choice determines which models fit in memory, how fast they run, and which tooling is available. Apple’s M4 Pro chip is a compelling option for developers who want strong performance while staying within the macOS ecosystem.

Apple M4 Pro Chip: A Leap in Performance

The M4 Pro is a significant step forward in Apple’s silicon lineup. Fabricated on TSMC’s second-generation 3-nanometer process, it offers up to 14 CPU cores (10 performance and 4 efficiency) and up to 20 GPU cores, a configuration aimed at demanding computational workloads. Its 16-core Neural Engine accelerates machine learning tasks at up to 38 trillion operations per second (TOPS), roughly double the 18 TOPS Apple quoted for the Neural Engine in the M3 generation.

Unified Memory Architecture: Enhancing AI Workflows

A standout feature of the M4 Pro is its unified memory architecture, which supports up to 64GB of LPDDR5X memory with 273GB/s of bandwidth. The CPU, GPU, and Neural Engine all access the same memory pool, which avoids copying data between separate CPU and GPU memory and lets large models sit in memory the GPU can address directly. Both properties matter for LLM work, where model size is often limited by memory capacity and generation speed by memory bandwidth.
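As a rough illustration of why capacity and bandwidth both matter, the sketch below estimates how much memory a model’s weights need and a ceiling on generation speed. It assumes that producing each token requires reading every weight once from memory, so tokens per second cannot exceed bandwidth divided by weight size; real throughput will be lower. The function names and the `usable_fraction` value are hypothetical placeholders, not Apple figures; only the 64GB and 273GB/s numbers come from the specification quoted above.

```python
# Back-of-envelope sizing for running an LLM from unified memory.
# Assumptions (illustrative, not measured): decoding is memory-bound and
# reads every weight once per token; KV cache and activations are ignored.

M4_PRO_MAX_RAM_GB = 64.0        # maximum unified memory quoted in the article
M4_PRO_BANDWIDTH_GB_S = 273.0   # memory bandwidth quoted in the article


def weight_footprint_gb(params_billion: float, bits_per_weight: float) -> float:
    """Memory needed for the weights alone, in GB (1 GB = 1e9 bytes)."""
    total_bytes = params_billion * 1e9 * bits_per_weight / 8
    return total_bytes / 1e9


def fits_in_memory(weights_gb: float,
                   ram_gb: float = M4_PRO_MAX_RAM_GB,
                   usable_fraction: float = 0.75) -> bool:
    """True if the weights fit in the share of RAM assumed to be usable.

    usable_fraction is a hypothetical allowance for the OS and other apps.
    """
    return weights_gb <= ram_gb * usable_fraction


def decode_ceiling_tokens_per_s(weights_gb: float,
                                bandwidth_gb_s: float = M4_PRO_BANDWIDTH_GB_S) -> float:
    """Upper bound on tokens/s if each token reads all weights once."""
    return bandwidth_gb_s / weights_gb


if __name__ == "__main__":
    examples = [("8B @ 4-bit", 8, 4), ("70B @ 4-bit", 70, 4), ("70B @ 16-bit", 70, 16)]
    for name, params_b, bits in examples:
        gb = weight_footprint_gb(params_b, bits)
        print(f"{name}: {gb:.1f} GB, fits={fits_in_memory(gb)}, "
              f"ceiling={decode_ceiling_tokens_per_s(gb):.1f} tok/s")
```

Under these assumptions, a 70-billion-parameter model quantized to 4 bits needs about 35GB for its weights, fits within 64GB, and tops out near 8 tokens per second. At 16 bits the same model needs about 140GB and does not fit.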

macOS Integration: A Seamless Development Experience

Machines with the M4 Pro run macOS, which gives developers a cohesive environment in which the operating system and hardware are designed together. Popular AI frameworks support GPU acceleration on Apple Silicon through Metal: PyTorch via its MPS backend and TensorFlow via the tensorflow-metal plugin. However, NVIDIA’s CUDA is not available on Apple Silicon, so libraries that depend on it will not run as-is and require alternatives or workarounds.
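A common way to keep code portable between Apple Silicon and NVIDIA machines is to pick the compute device at runtime. The sketch below assumes PyTorch is the framework in use and selects its Metal Performance Shaders (`mps`) backend when available; `pick_device` is a hypothetical helper name, not part of PyTorch.

```python
def pick_device() -> str:
    """Return "cuda", "mps", or "cpu" depending on what this machine offers."""
    try:
        import torch  # assumed to be installed; fall back to CPU if it is not
    except ImportError:
        return "cpu"

    if torch.cuda.is_available():                # NVIDIA GPU with CUDA
        return "cuda"
    mps = getattr(torch.backends, "mps", None)   # absent in older PyTorch builds
    if mps is not None and mps.is_available():   # Apple Silicon GPU via Metal
        return "mps"
    return "cpu"


if __name__ == "__main__":
    print(f"Using device: {pick_device()}")
```

With PyTorch installed, the returned string can be passed to tensor or model placement, for example `model.to(pick_device())`. Not every operator is implemented on the `mps` backend; setting the environment variable `PYTORCH_ENABLE_MPS_FALLBACK=1` lets unsupported operators run on the CPU instead, at some cost in speed.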

Considerations for AI and LLM Development

For developers with a budget of around RM10,000, M4 Pro configurations are a viable option. They offer solid performance for a range of AI work, from training smaller models to real-time inference. Assess your project’s requirements first, though: if your work depends heavily on CUDA-accelerated tooling, a system with a dedicated NVIDIA GPU is likely the better fit.

Conclusion

Apple’s M4 Pro chip is a notable step forward for AI development hardware. Its architecture, unified memory, and tight integration with macOS make it a strong contender for developers who want efficient, powerful tools within the Apple ecosystem. As with any technology investment, match the hardware to your project’s specific needs and compatibility constraints.