The Evolving Landscape of GIS Software Systems: From Command Lines to the Cloud, AI & Beyond

View

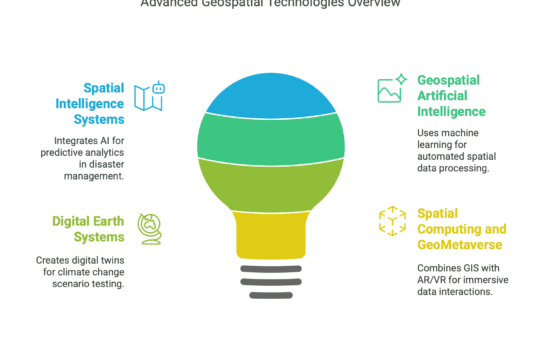

The Future of Geographic Information Systems

Sejarah dan Evolusi Sistem Koordinat di Malaysia (History and Evolution of Coordinate Systems in Malaysia)

View

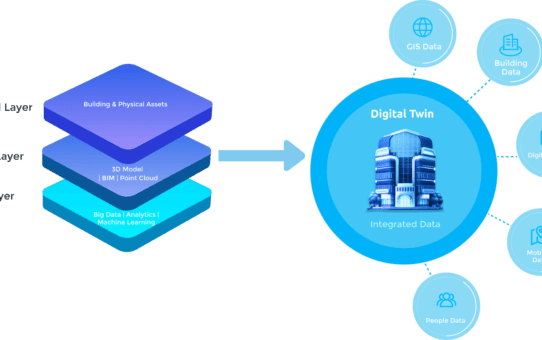

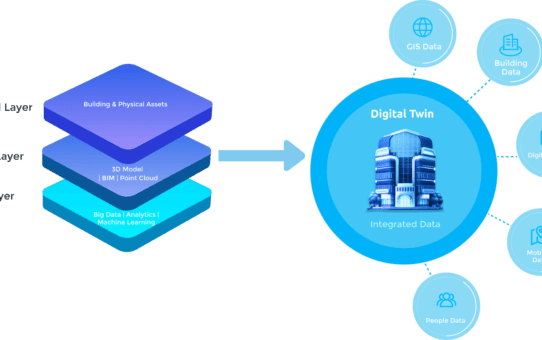

Advancing Digital Twins and GIS Integration

View

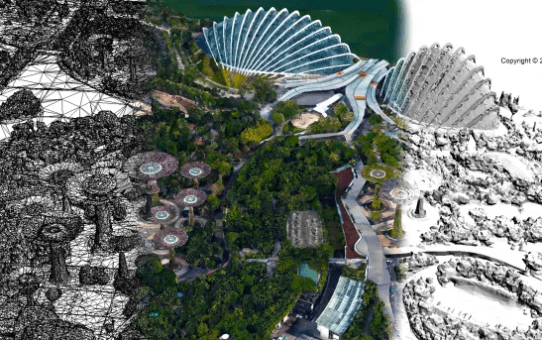

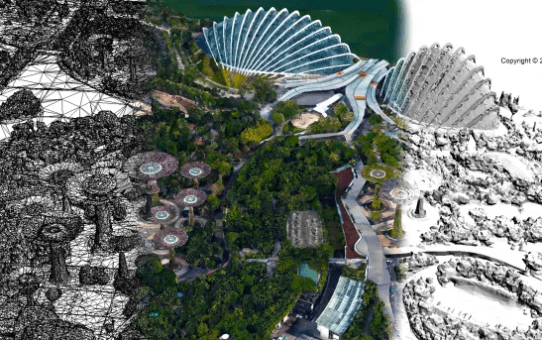

Singapore’s Country-Scale Digital Twin: A Revolutionary Model for Smart Cities

View

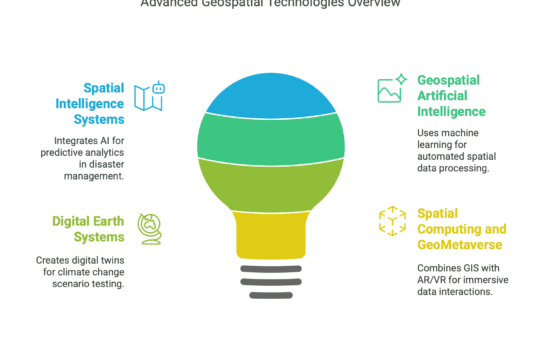

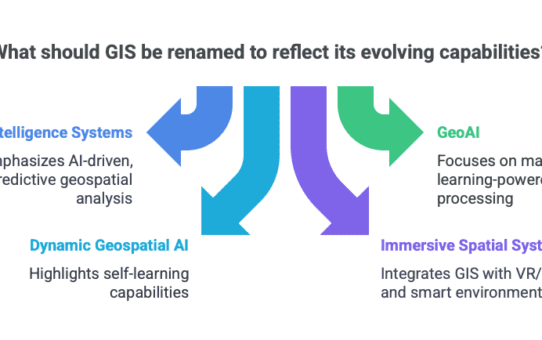

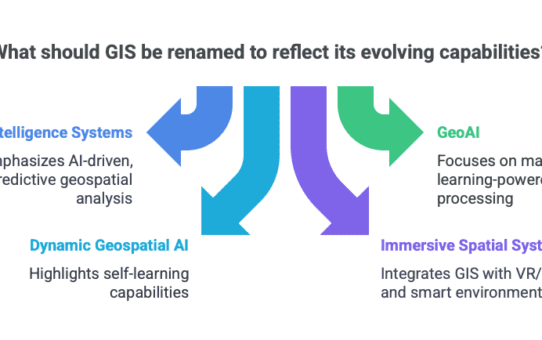

The Future of GIS and the Evolution of Emerging Concepts and Terminology

View

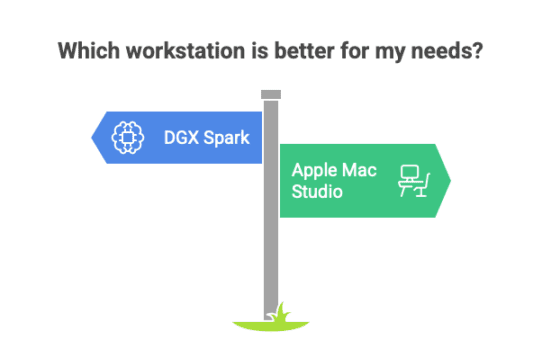

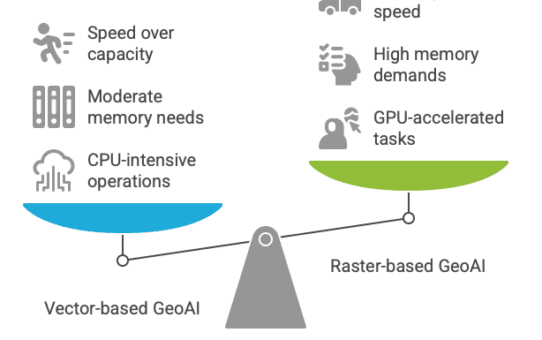

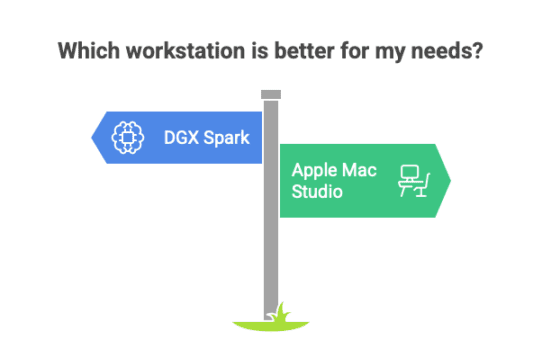

NVIDIA DGX Spark vs. Apple Mac Studio: Which AI Workstation Reigns Supreme?

View

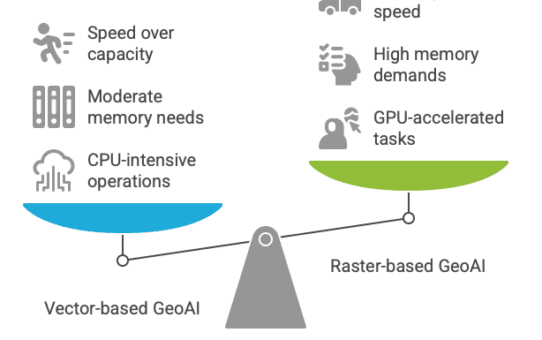

Optimizing Hardware for Vector-Based GeoAI

View

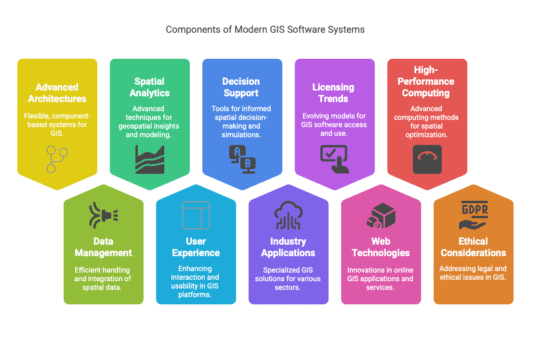

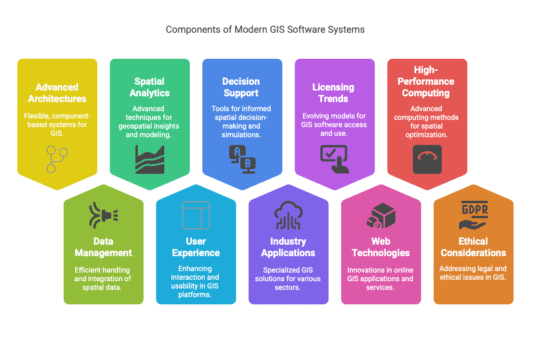

Advancing Modern GIS Software Systems: Key Technologies, Innovations, and Future Directions

Apple’s M4 Pro: A Game-Changer for AI and Machine Learning Professionals

Development of a Web-Based Application for Managing Student Final Year Projects Repository

Development of a Student Absence Submission System