By Shahabuddin Amerudin

In the rapidly evolving field of Geospatial Artificial Intelligence (GeoAI), hardware decisions can make or break a project’s feasibility and efficiency. As GeoAI applications increasingly demand processing of massive satellite imagery datasets and deployment of complex machine learning models, the constraints of underpowered hardware become painfully apparent. This article examines the critical hardware requirements for GeoAI workloads and provides recommendations for building a capable system.

Understanding GeoAI’s Unique Hardware Requirements

GeoAI sits at the intersection of Geographic Information Systems (GIS) and Artificial Intelligence (AI), combining spatial data analysis with deep learning to extract insights from geographic data. This combination creates computational demands that differ from standard AI workloads:

- Data Volume: Satellite imagery and remote sensing data are exceptionally large, and a single multispectral scene can occupy 10GB or more once decoded into floating-point arrays

- Multidimensional Analysis: GeoAI typically involves processing both spatial and temporal dimensions simultaneously

- Mixed Workloads: Projects commonly require database operations, model training, and visualization services running concurrently

These characteristics create specific requirements across all hardware components.

Memory: The Foundation of GeoAI Computing

The Case for High-Capacity RAM

Consider a typical workflow processing Sentinel-2 multispectral imagery:

A single Sentinel-2 scene covering 100km × 100km with 13 spectral bands needs roughly 6GB of memory as 32-bit floats, and over 12GB as 64-bit floats, once all bands are decoded and resampled to the common 10m grid. Operations like atmospheric correction, calculating vegetation indices, or running classification algorithms each produce intermediate arrays of similar size, so memory requirements multiply rapidly.
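The memory figure depends heavily on data type and resampling, so it is worth reproducing the arithmetic. The sketch below assumes the standard Sentinel-2 L1C tile grid (10980, 5490 and 1830 pixels per side for the 10m, 20m and 60m bands) and ignores metadata and file-format overhead; `scene_bytes` is a hypothetical helper, not part of any library.

```python
# Rough in-memory footprint of one Sentinel-2 L1C tile (13 bands).
# Side lengths are the standard 10 m / 20 m / 60 m raster sizes of a
# ~110 km x 110 km tile.
BAND_GRIDS = {
    10: (10980, ["B02", "B03", "B04", "B08"]),
    20: (5490, ["B05", "B06", "B07", "B8A", "B11", "B12"]),
    60: (1830, ["B01", "B09", "B10"]),
}

BYTES_PER_SAMPLE = {"uint16": 2, "float32": 4, "float64": 8}


def scene_bytes(dtype: str, resample_to_10m: bool) -> int:
    """Bytes needed to hold all 13 bands of one tile as dense arrays."""
    width = BYTES_PER_SAMPLE[dtype]
    total = 0
    for side, bands in BAND_GRIDS.values():
        # Resampling onto the 10 m grid multiplies the pixel count of the
        # coarser bands by 4x (20 m bands) or 36x (60 m bands).
        pixels = 10980 ** 2 if resample_to_10m else side ** 2
        total += pixels * width * len(bands)
    return total


if __name__ == "__main__":
    for dtype in ("uint16", "float32", "float64"):
        for resampled in (False, True):
            grid = "10 m grid" if resampled else "native grids"
            print(f"{dtype:8s} {grid:13s} {scene_bytes(dtype, resampled) / 1e9:6.2f} GB")
```

Even the compact native-resolution 16-bit layout comes to about 1.35GB; the resampled floating-point cases are several times larger, and that is before any intermediate arrays exist.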

With limited RAM, practitioners face a difficult choice:

- Process data in smaller geographic tiles, creating edge artifacts and inconsistencies

- Work with fewer spectral bands, sacrificing valuable information

- Reduce temporal resolution, losing the ability to detect subtle changes

- Constantly shuttle data between RAM and storage, causing significant performance penalties
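When memory does force tiling, a common way to reduce edge artifacts is to read each tile with a margin of overlapping pixels, at least as wide as the neighbourhood the operation looks at, and then crop the result back to the tile's core before stitching. The generator below is a minimal, library-independent sketch of that window arithmetic; `overlapping_windows` is a hypothetical name, and in practice the windows would be handed to a raster reader that supports windowed reads (rasterio does, for example).

```python
from typing import Iterator, Tuple

Window = Tuple[int, int, int, int]  # (row_offset, col_offset, height, width)


def overlapping_windows(
    rows: int, cols: int, tile: int, overlap: int
) -> Iterator[Tuple[Window, Window]]:
    """Cover a rows x cols raster with tiles of at most tile x tile pixels.

    Yields (padded, core): `padded` is the window to read, extended by
    `overlap` pixels on each side where the raster allows; `core` locates
    the un-padded tile inside the padded window, i.e. the part of the
    result to keep when stitching tiles back together.
    """
    if tile <= 0 or overlap < 0:
        raise ValueError("tile must be positive and overlap non-negative")
    for r in range(0, rows, tile):
        for c in range(0, cols, tile):
            core_h = min(tile, rows - r)
            core_w = min(tile, cols - c)
            r0, c0 = max(r - overlap, 0), max(c - overlap, 0)
            r1 = min(r + core_h + overlap, rows)
            c1 = min(c + core_w + overlap, cols)
            yield (r0, c0, r1 - r0, c1 - c0), (r - r0, c - c0, core_h, core_w)


if __name__ == "__main__":
    # One 10980 x 10980 Sentinel-2 10 m band, 2048-pixel tiles, 64-pixel margin.
    windows = list(overlapping_windows(10980, 10980, tile=2048, overlap=64))
    print(len(windows), "tiles; first:", windows[0])
```

The margin costs extra reads and computation, and it does not help operations that genuinely need the whole scene at once, such as scene-wide statistics.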

Recommendation for Memory

Minimum Viable: 64GB unified memory

Recommended: 128GB unified memory

Optimal for Large Projects: 192GB+ unified memory

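For training deep learning models with larger batch sizes while running multiple concurrent services, 128GB represents the sweet spot for most GeoAI workloads. On Apple Silicon the CPU and GPU share this unified memory, so it also limits how large a model and batch the GPU can work with. With 128GB, batch sizes of 64 or more become practical for many tile sizes and architectures, which can shorten training by keeping the GPU busier; the effect on model accuracy depends on the model and learning-rate schedule rather than being an automatic gain.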
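Since the memory left after the operating system and services is what bounds the batch size, a rough estimate takes only a few lines. This is a sketch under stated assumptions: the helper names are hypothetical, and the per-sample multiplier for activations, gradients and optimizer state is a placeholder that would have to be measured for a real model, for example with a profiler.

```python
GB = 10**9


def input_batch_bytes(batch: int, bands: int, tile: int, bytes_per_value: int = 4) -> int:
    """Bytes for one batch of input tiles shaped batch x bands x tile x tile
    (float32 by default)."""
    return batch * bands * tile * tile * bytes_per_value


def largest_batch(total_bytes: int, reserved_bytes: int, per_sample_bytes: int) -> int:
    """Largest batch whose per-sample cost fits in the memory left after
    reserving `reserved_bytes` for the OS and other services."""
    usable = total_bytes - reserved_bytes
    return max(usable // per_sample_bytes, 0)


if __name__ == "__main__":
    # Hypothetical workload: 13-band, 256 x 256 float32 tiles.
    per_input = input_batch_bytes(1, bands=13, tile=256)
    # ASSUMPTION: activations, gradients and optimizer state cost about 50x the
    # input per sample; this is a placeholder, not a measured value.
    per_sample = per_input * 50
    for total_gb in (64, 128):
        # ASSUMPTION: 24 GB reserved for the OS, database and web services.
        batch = largest_batch(total_gb * GB, 24 * GB, per_sample)
        print(f"{total_gb} GB unified memory -> batch size up to ~{batch}")
```

The input tensor itself is small (a batch of 64 such tiles is about 0.22GB); it is the model-dependent per-sample cost that decides whether 64GB or 128GB is the binding limit.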

CPU Requirements: Core Count vs. Clock Speed

CPU Workloads in GeoAI

GeoAI workloads involve a mix of:

- Highly parallelizable tasks (tile processing, batch prediction)

- Largely sequential or I/O-bound steps (reading data from disk, some preprocessing stages)

- Mixed workflows (database operations, spatial algorithms)

Recommendation for CPU

Minimum Viable: 10-core CPU

Recommended: 14-16 core CPU

Optimal for Parallel Processing: 24+ core CPU

The Apple M4 Max with 16-core CPU provides an excellent balance for GeoAI work. Compared with the 14-core variant, it has two more performance cores (12 instead of 10, alongside 4 efficiency cores in both), which provide significant advantages for:

- Parallel image processing operations

- Running multiple concurrent services (database, web server, model inference)

- Preprocessing multiple datasets simultaneously

For server applications running 24/7, the higher core count helps maintain responsive performance even under sustained load from multiple users or services.
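The benefit of extra cores comes from processing independent tiles side by side while leaving some cores for long-running services. The sketch below uses only the standard library and assumes each tile fits in memory as plain Python lists; `ndvi`, `worker_count` and `process_tiles` are hypothetical helpers, and reserving four cores for services is an arbitrary illustrative default rather than a recommendation.

```python
import os
from concurrent.futures import ProcessPoolExecutor
from typing import List, Sequence, Tuple

Tile = Tuple[Sequence[float], Sequence[float]]  # (red, nir) pixel values


def ndvi(red: Sequence[float], nir: Sequence[float]) -> List[float]:
    """NDVI = (NIR - Red) / (NIR + Red), returning 0.0 where the sum is zero."""
    return [(n - r) / (n + r) if (n + r) != 0 else 0.0 for r, n in zip(red, nir)]


def worker_count(reserved_for_services: int = 4) -> int:
    """Cores available for batch jobs after leaving some free for
    long-running services (database, web server, model inference)."""
    cores = os.cpu_count() or 1  # logical CPUs; on Apple Silicon this includes efficiency cores
    return max(1, cores - reserved_for_services)


def process_tiles(tiles: Sequence[Tile], workers: int) -> List[List[float]]:
    """Compute NDVI for each tile in a separate worker process."""
    reds = [red for red, _ in tiles]
    nirs = [nir for _, nir in tiles]
    with ProcessPoolExecutor(max_workers=workers) as pool:
        return list(pool.map(ndvi, reds, nirs))


if __name__ == "__main__":
    # Hypothetical toy tiles; real ones would come from a raster reader.
    toy_tiles = [([0.1, 0.2], [0.5, 0.6]), ([0.3, 0.0], [0.3, 0.0])]
    print(process_tiles(toy_tiles, worker_count()))
```

Process pools suit CPU-bound pure-Python work; for NumPy-heavy code, which releases the GIL in many operations, a thread pool can be sufficient and avoids copying tiles between processes.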

GPU: Accelerating Deep Learning and Image Processing

GPU Workloads in GeoAI

GPUs accelerate:

- Deep learning model training and inference

- Many image processing operations (filtering, transformations)

- Some vector operations in spatial analysis

Recommendation for GPU

Minimum Viable: 20-core GPU

Recommended: 32-40 core GPU

Optimal for Complex Models: 60+ core GPU

The 40-core GPU in the higher-end M4 Max configuration provides substantial benefits:

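- Roughly 25% more GPU cores than the 32-core variant (and twice the 20-core minimum), plus higher memory bandwidth (546GB/s versus 410GB/s), which speeds up training of CNN-based models like U-Net to the extent that training is GPU-bound

- Faster training of larger model architectures (transformers, complex segmentation networks), although whether such a model fits at all depends mainly on unified memory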

- Faster inference for production deployment

- Accelerated image processing operations

While the 32-core GPU is adequate for many tasks, the 40-core GPU leaves more headroom for complex models and larger datasets.
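How much of the extra GPU capacity shows up as shorter training depends on how much of each training step actually runs on the GPU. Amdahl's law, swapped in here as a back-of-envelope model rather than a benchmark, makes this concrete. The sketch assumes the core-count ratio (40/32 = 1.25) is the best-case GPU speedup, which is a simplification since bandwidth-bound work may scale differently, and the GPU-bound fractions are illustrative.

```python
def amdahl_speedup(gpu_fraction: float, gpu_speedup: float) -> float:
    """Overall speedup 1 / ((1 - p) + p / s) when a fraction p of each
    training step runs s times faster and the rest (data loading, Python
    overhead, logging) is unchanged."""
    if not 0.0 <= gpu_fraction <= 1.0 or gpu_speedup <= 0:
        raise ValueError("need 0 <= gpu_fraction <= 1 and gpu_speedup > 0")
    return 1.0 / ((1.0 - gpu_fraction) + gpu_fraction / gpu_speedup)


if __name__ == "__main__":
    # Best case for 40 vs 32 GPU cores: GPU work scales with core count.
    core_ratio = 40 / 32
    for p in (1.0, 0.9, 0.7):  # illustrative GPU-bound fractions
        print(f"GPU-bound share {p:.0%}: {amdahl_speedup(p, core_ratio):.2f}x overall")
```

A step that is 80% GPU-bound, for instance, gains about 1.19x rather than the full 1.25x, which is why fast data loading matters as much as raw GPU core count.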

Storage: Size, Speed, and Endurance

Storage Workloads in GeoAI

GeoAI creates unique storage demands:

- Large raw datasets (satellite imagery collections can reach TB scale)

- High I/O patterns during processing

- Frequent read/write cycles during data preparation

- Need for both high capacity and high speed

Recommendation for Storage

Minimum Viable: 1TB SSD

Recommended: 2TB SSD

Optimal for Large Projects: 4TB+ SSD

Beyond just capacity, speed and endurance are critical:

- NVMe storage provides essential speed for data loading operations

- High write endurance is important for preprocessing workflows

- Consider external storage solutions for archival data

For the Mac Studio, while 1TB of internal storage is sufficient to start, upgrading to 2TB provides valuable headroom for:

- Working with multiple concurrent projects

- Maintaining local copies of frequently used datasets

- Storing intermediate results without constant external transfers
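Because intermediate results can quietly outgrow the internal drive, a pre-flight check before a large download or preprocessing run is cheap insurance. A minimal sketch using the standard library's `shutil.disk_usage`; the helper names are hypothetical, and the 20% headroom, the ~1GB per-scene download size and the intermediate-data multiplier are illustrative assumptions, not measured figures.

```python
import shutil

GB = 10**9


def enough_space(path: str, needed_bytes: int, headroom: float = 0.2) -> bool:
    """True if the volume holding `path` can absorb `needed_bytes` while
    still keeping a `headroom` fraction of its total capacity free."""
    usage = shutil.disk_usage(path)  # named tuple: total, used, free (bytes)
    return usage.free - needed_bytes >= headroom * usage.total


def project_bytes(n_scenes: int, gb_per_scene: float, intermediate_factor: float) -> int:
    """Raw downloads plus intermediates, with intermediates expressed as a
    multiple of the raw volume (an assumption to replace with measurement)."""
    raw = n_scenes * gb_per_scene * GB
    return int(raw * (1 + intermediate_factor))


if __name__ == "__main__":
    # Hypothetical plan: 60 scenes of ~1 GB each on disk, intermediates 3x raw.
    needed = project_bytes(60, gb_per_scene=1.0, intermediate_factor=3.0)
    verdict = "enough space" if enough_space(".", needed) else "free up space first"
    print(f"{needed / GB:.0f} GB needed: {verdict}")
```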

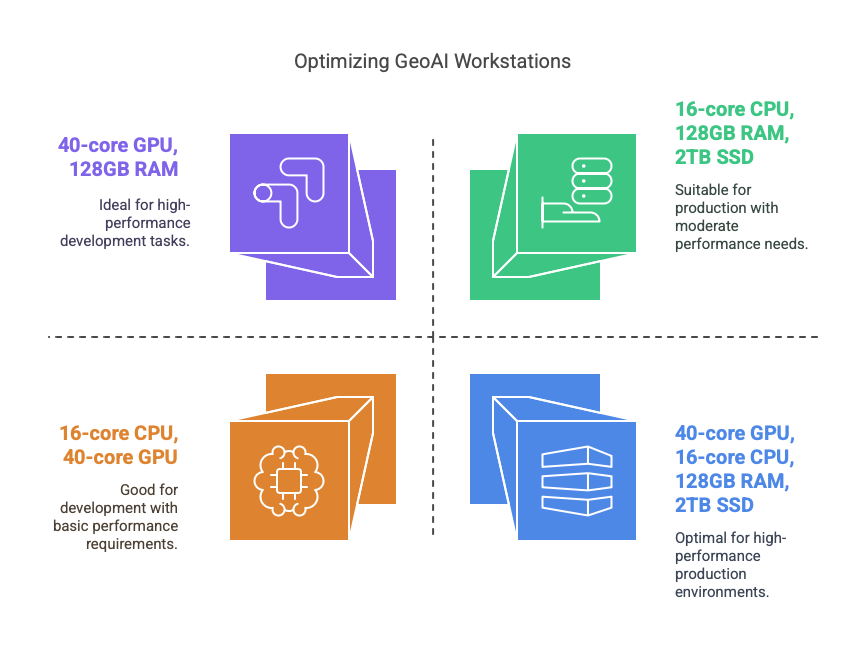

Balancing Hardware Components for Different GeoAI Use Cases

Research & Model Development Focus

If your primary goal is developing and training models:

- Priority: GPU cores and memory

- Recommendation: M4 Max with 40-core GPU and 128GB RAM

Production Server Focus

If your system will primarily serve as a 24/7 deployment platform:

- Priority: CPU cores, memory, and storage

- Recommendation: M4 Max with 16-core CPU (Apple pairs it with the 40-core GPU), 128GB RAM, 2TB SSD

Balanced Workstation

For a system that handles both development and production:

- Recommendation: M4 Max with 16-core CPU, 40-core GPU, 128GB RAM, 2TB SSD

The Economics of Hardware Investment for GeoAI

When evaluating hardware for GeoAI work, especially on platforms like Apple Silicon where post-purchase upgrades are impossible, the initial investment should be weighed against:

- Productive Lifespan: A higher-spec machine is likely to remain viable for longer, plausibly 5-7+ years versus 3-4 years for lower specifications

- Time Efficiency: Faster processing and the ability to handle larger datasets without workarounds translate directly into researcher productivity

- Capability Expansion: Some analyses simply cannot be performed under severe hardware constraints

- Opportunity Cost: For professionals, the inability to take on certain projects due to hardware limitations represents lost revenue potential

Time Series Analysis: Hardware Requirements in Practice

One of the most powerful applications of GeoAI is analyzing changes over time. Consider monitoring land use changes or agricultural patterns over a five-year period:

Processing 5 years of monthly Sentinel-2 imagery for a medium-sized watershed involves:

- 60 timestamps × 5-10GB per image = 300-600GB of data when fully loaded in memory (the compressed files on disk are considerably smaller)

- Complex temporal algorithms running across multiple dimensions

- Simultaneous storage, memory, and compute demands

Hardware Impact:

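- Memory: 128GB can hold at most roughly 20-40% of the time series at once (128GB of 300-600GB), and less in practice, since the operating system, services, and intermediate arrays share the same memory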

- CPU: 16 cores enable parallel processing of temporal segments

- GPU: 40 cores accelerate the application of ML models across the time series

- Storage: 2TB provides space for both raw data and processed results
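Because the full series does not fit in memory, temporal statistics are commonly accumulated one timestamp at a time. The sketch below shows that streaming pattern for a per-pixel mean using plain Python lists; `streaming_mean` and `synthetic_series` are hypothetical helpers, the latter standing in for a real raster reader, and order-dependent statistics such as the median cannot be accumulated this simply.

```python
from typing import Iterable, Iterator, List, Optional


def streaming_mean(images: Iterable[List[float]]) -> List[float]:
    """Per-pixel mean over a time series, keeping only the current image
    and one running total per pixel in memory."""
    totals: Optional[List[float]] = None
    count = 0
    for image in images:
        if totals is None:
            totals = [0.0] * len(image)
        elif len(image) != len(totals):
            raise ValueError("all images must share the same pixel grid")
        for i, value in enumerate(image):
            totals[i] += value
        count += 1
    if totals is None:
        raise ValueError("the time series is empty")
    return [total / count for total in totals]


def synthetic_series(n_timestamps: int, n_pixels: int) -> Iterator[List[float]]:
    """Hypothetical stand-in for a reader that loads one timestamp
    (e.g. one monthly Sentinel-2 image) at a time."""
    for t in range(n_timestamps):
        yield [float(t)] * n_pixels


if __name__ == "__main__":
    # 60 monthly timestamps, matching the five-year example above.
    print(streaming_mean(synthetic_series(60, n_pixels=4)))
```

Memory use stays at roughly two images' worth regardless of series length, so more RAM buys larger spatial extents or more bands per step rather than being a hard requirement for long series.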

Conclusion: Building the Optimal GeoAI Workstation

The ideal GeoAI workstation balances all hardware components while recognizing that memory often emerges as the critical limiting factor. For serious GeoAI work—whether in research, commercial applications, or public sector—investing in a balanced, high-performance system isn’t an extravagance but a necessity that expands the horizon of possible applications.

Final Recommendation:

- Mac Studio with M4 Max

- 16-core CPU: For reliable parallel processing

- 40-core GPU: For accelerated ML training and inference

- 128GB unified memory: For handling large datasets and complex models

- 2TB SSD storage: For working with multiple projects simultaneously

- Price: RM16,181.50 (2025 education pricing)

This configuration provides the optimal balance of components for GeoAI work and ensures the system will remain viable as datasets and models continue to grow in size and complexity over the coming years.

As we look to the future of GeoAI, with increasing resolution of satellite imagery, more complex machine learning architectures, and more ambitious applications, all hardware requirements will continue to grow—making today’s investment in robust computing capabilities a future-proof decision.